Autonomous testing vs automated testing: Why AI script generators don't solve the QA scaling bottleneck

Your AI-powered test automation tool writes Selenium scripts 10x faster. You still have the same scaling problem.

AI script generators, such as GPT-assisted Cypress setups or Playwright plugins, speed up test writing, but they do not fix the QA scaling problem. The bottleneck isn't execution speed or script writing. It's test case definition and maintenance at scale. Engineering leaders face a tough choice as their team grows from 50 to 200 engineers. They can hire more QA staff, which is expensive and takes 3-4 months of onboarding per hire, or they can accept slower release cycles, which is a competitive risk.

AI script generators still require humans to define test cases

AI test automation works like this. It takes a test goal defined by a person, such as "check the login process," and quickly generates Selenium or Playwright code for it. The human still decides what to test and when to test it, and still maintains the test definition. The system writes the script, but someone has to tell it what script to write. That's not eliminating the bottleneck. That's moving it.

Autonomous generation works differently. The system reads Figma designs and commit messages to determine test cases without human definition. When Figma shows an "Add to Cart" button, the system generates tests for cart functionality automatically. No human writes "test add to cart flow." The system understands intent from design specs, not from human instructions.
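To make the idea concrete, here is a minimal sketch of deriving test goals from design elements. It is illustrative only: `DesignElement`, `derive_test_cases`, and the two rules are hypothetical names and assumptions made for this example. They are not Figma's API and not a description of how any particular product works internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignElement:
    """Hypothetical, simplified stand-in for one node in a design file."""
    kind: str   # e.g. "button" or "input"
    label: str  # visible text shown in the design


def derive_test_cases(elements):
    """Turn design elements into plain-language test goals.

    The rules below are assumptions for illustration; a real system
    would need far richer context (screen transitions, commits, states).
    """
    cases = []
    for el in elements:
        if el.kind == "button":
            cases.append(f'Clicking "{el.label}" triggers its expected action')
        elif el.kind == "input":
            cases.append(
                f'Field "{el.label}" accepts valid input and rejects invalid input'
            )
        # Element kinds without a rule produce no test goal in this sketch.
    return cases


# Example screen: no human wrote "test add to cart flow".
screen = [
    DesignElement("button", "Add to Cart"),
    DesignElement("input", "Quantity"),
]

for case in derive_test_cases(screen):
    print(case)
```

The point of the sketch is the direction of the data flow: the test goal comes out of the design, rather than being typed in by a person and handed to a script generator.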

Adoption of AI in testing rose from 7% in 2023 to 16% in 2025, with the focus on test generation, self-healing frameworks, and predictive analytics (Testlio Industry Statistics 2025). Teams adopted the tools. They still face the scaling bottleneck. Adoption doesn't equal solving the problem. It means writing scripts faster while still maintaining them manually.

The real bottleneck: Test case definition and maintenance at scale

Traditional automation consumes 60% of QA engineering time on regression test maintenance. Every refactor breaks tests tied to CSS selectors, DOM structure, or component names. A developer renames .button-primary to .btn-main, and the test fails even though the behavior is identical. The test was coupled to implementation details, not user intent.
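The rename scenario can be reproduced in a few lines. The sketch below uses only Python's standard library to compare two lookup strategies against the same button before and after the refactor. The helper names (`ButtonCollector`, `find_by_class`, `find_by_text`) and the markup are assumptions made for this example, not part of any testing framework.

```python
from html.parser import HTMLParser


class ButtonCollector(HTMLParser):
    """Collects (class attribute, visible text) for every <button>."""

    def __init__(self):
        super().__init__()
        self.buttons = []
        self._in_button = False
        self._class = ""
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "button":
            self._in_button = True
            self._class = dict(attrs).get("class") or ""
            self._text = []

    def handle_data(self, data):
        if self._in_button:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "button" and self._in_button:
            self.buttons.append((self._class, "".join(self._text).strip()))
            self._in_button = False


def _buttons(html):
    parser = ButtonCollector()
    parser.feed(html)
    return parser.buttons


def find_by_class(html, css_class):
    """Implementation-coupled lookup: depends on the CSS class name."""
    return [b for b in _buttons(html) if css_class in b[0].split()]


def find_by_text(html, text):
    """Intent-coupled lookup: depends on what the user actually sees."""
    return [b for b in _buttons(html) if b[1] == text]


before = '<button class="button-primary">Add to Cart</button>'
after = '<button class="btn-main">Add to Cart</button>'  # refactor, same behavior

print(len(find_by_class(before, "button-primary")))  # 1
print(len(find_by_class(after, "button-primary")))   # 0: the test breaks
print(len(find_by_text(before, "Add to Cart")))      # 1
print(len(find_by_text(after, "Add to Cart")))       # 1: the test survives
```

Real frameworks offer the same choice. Playwright, for example, supports user-facing locators such as `page.get_by_role("button", name="Add to Cart")` alongside CSS selectors, and only the latter depend on class names.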

Here's the scaling math. Your team grows from 50 to 200 engineers. If test coverage requires 1 QA per 10 developers, QA headcount has to grow from 5 to 20, which means 15 new hires. Each takes 3-4 months to onboard and understand your product. Test script maintenance grows proportionally with engineering headcount. The more you build, the more tests you maintain.
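The arithmetic above, worked through as a short script. It reads 5 and 20 as the QA headcount before and after growth, so the number of new hires is the difference. The total onboarding figure is derived here by multiplying hires by the 3-4 month range; it is a back-of-the-envelope estimate, not a number from the sources cited in this article.

```python
import math

DEVS_PER_QA = 10            # ratio stated in the text: 1 QA per 10 developers
ONBOARDING_MONTHS = (3, 4)  # onboarding range stated in the text


def qa_headcount(engineers):
    """QA staff needed to keep the stated ratio, rounded up."""
    return math.ceil(engineers / DEVS_PER_QA)


def scaling_cost(current_engineers, target_engineers):
    current_qa = qa_headcount(current_engineers)
    target_qa = qa_headcount(target_engineers)
    new_hires = target_qa - current_qa
    low, high = ONBOARDING_MONTHS
    return {
        "current_qa": current_qa,
        "target_qa": target_qa,
        "new_hires": new_hires,
        # Derived estimate: total person-months spent onboarding.
        "onboarding_person_months": (new_hires * low, new_hires * high),
    }


result = scaling_cost(50, 200)
print(result)
# {'current_qa': 5, 'target_qa': 20, 'new_hires': 15,
#  'onboarding_person_months': (45, 60)}
```

Under these assumptions, keeping the ratio means 15 QA hires and roughly 45 to 60 person-months of onboarding before the new hires are fully productive.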

You face the decision: hire proportionally (expensive, slow) or accept slower releases (competitive risk). Traditional automation doesn't change this equation. It shifts the bottleneck from manual test execution to script maintenance. That's not scaling. That's rearranging the problem.

Design-driven test generation: Testing intent, not implementation

Intent-based testing architecture changes the equation. Tests generated from Figma design specs test user behavior ("confirmation message appears after checkout"), not implementation details (.checkout-confirmation-modal). Design specs remain stable even when code changes. When developers refactor components or restructure the DOM, tests tied to design intent survive. Tests tied to CSS selectors break immediately.

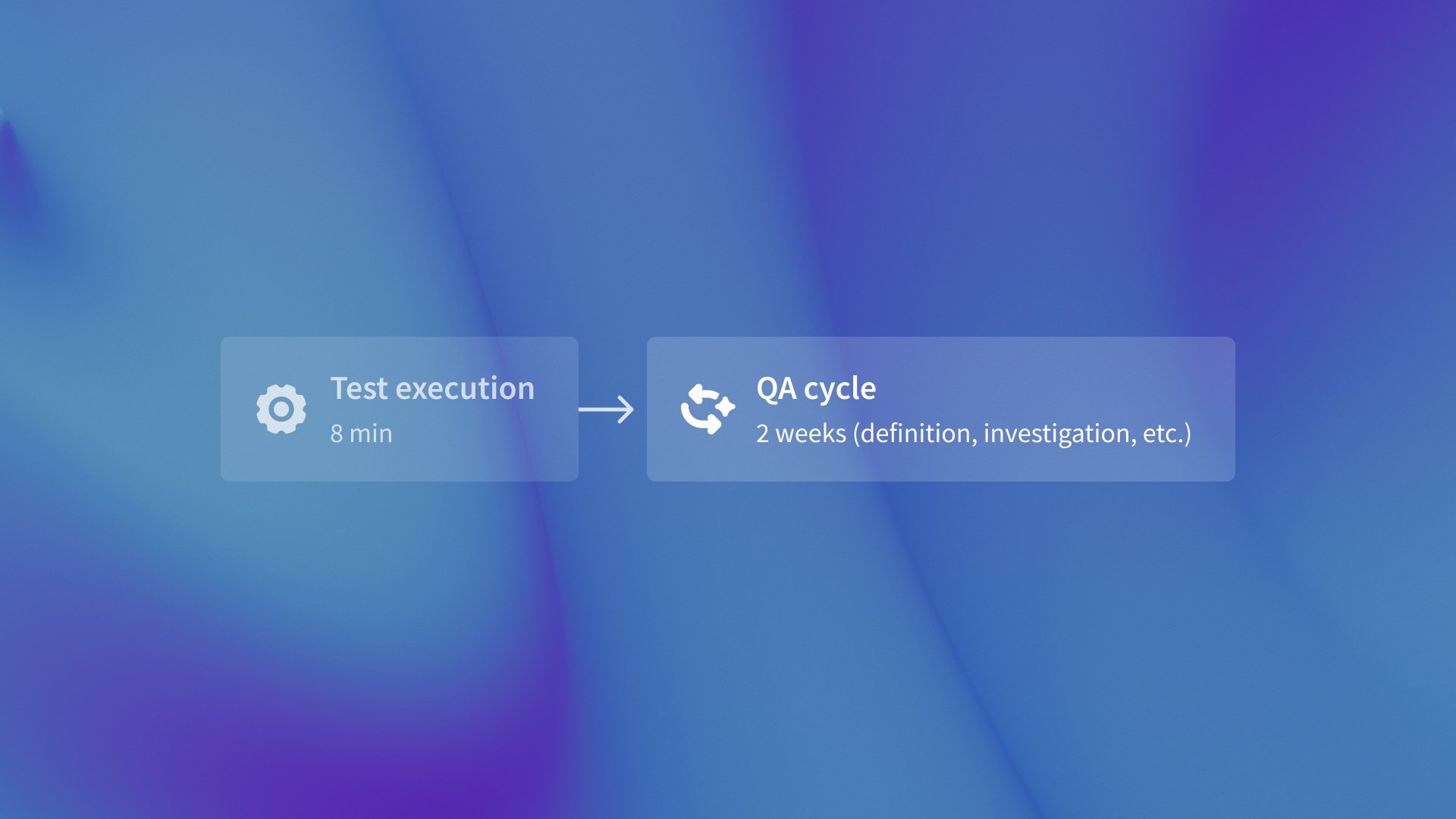

QA flow caught 847 bugs with zero human-written test cases, and QA cycle time fell from 2 weeks to 3 days. The bottleneck wasn't execution speed. It was the human time required to define, write, and maintain the test scenarios that caught them. The system generated test cases from designs and commits, eliminating human definition entirely.

Using QA flow's audit tool, teams can check their test coverage and find gaps without defining test cases manually. The system reads your Figma designs, understands user flows from screen transitions, and identifies what needs testing automatically.

The competitive reality: 80% adoption doesn't mean 80% success

80% of software teams will adopt AI-driven testing by 2025-2026 (ThinkSys QA Trends Report 2026). But adoption doesn't equal solving the problem. Many teams adopt AI script generators and still face the QA scaling bottleneck. They write tests faster but maintain them manually. The fundamental constraint remains unchanged.

Here's the distinction that matters: automated testing means the system runs what humans defined. Autonomous testing means the system defines what to test from design specs. This isn't semantic. It's architectural. Only autonomous generation eliminates the scaling bottleneck because it removes human test case definition entirely.

Teams that adopt AI script generators still hire QA proportionally or slow down releases. Teams that adopt autonomous generation scale test coverage with engineering commits, not QA headcount. That's the difference between writing code faster and eliminating the bottleneck entirely.

What this actually means

AI-powered script generators don't solve the QA scaling problem. They write code faster while humans still define and maintain test cases. Autonomous testing eliminates the bottleneck by generating test cases from Figma designs and GitHub commit messages. QA cycle time cut from 2 weeks to 3 days. 847 bugs caught. Zero human-written test cases.

That's not faster test writing. That's eliminating test case definition entirely.
