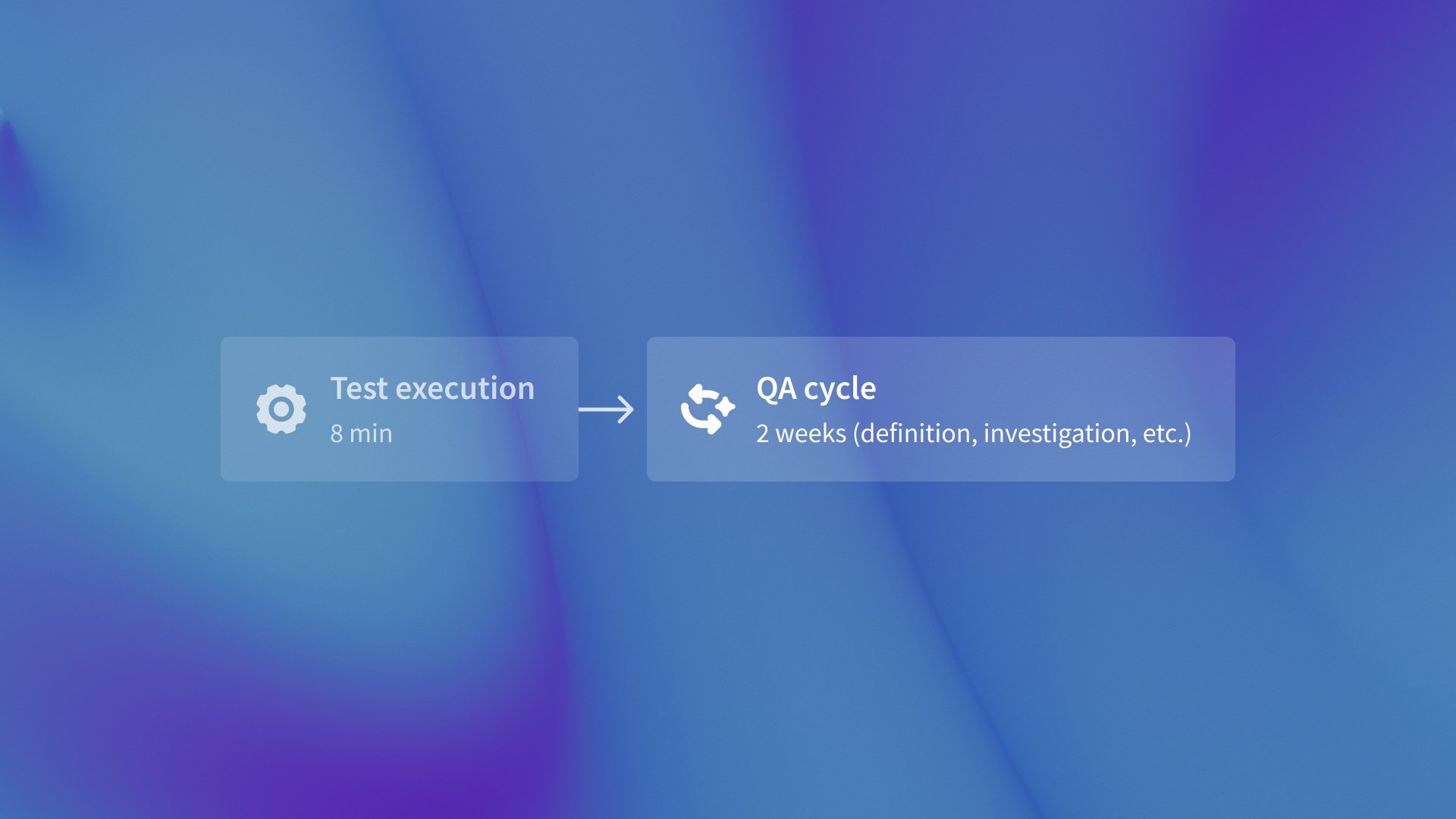

Your Test Suite Runs in 8 Minutes. Your QA Cycle Still Takes 2 Weeks.

You invested in Cypress. Parallelized your test execution. Got CI/CD running smoothly. Your test suite completes in under 10 minutes. Yet your QA cycle time for a typical release? Still 1-2 weeks.

The math doesn't add up. If test execution takes 10 minutes and your cycle takes 2 weeks, execution accounts for well under 1% of total time, even if you count only working hours. So what's eating the other 99%?
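Here is a quick check of that arithmetic as a minimal Python sketch. The only assumptions are a 10-minute suite and a 2-week cycle, measured either as wall-clock time or as a 40-hour work week:

```python
# Share of a QA cycle spent running the test suite.
# Assumed inputs: a 10-minute suite and a 2-week cycle, measured either as
# wall-clock time or as working time (5 days x 8 hours per week).

def execution_share(exec_minutes: float, cycle_minutes: float) -> float:
    """Return execution time as a percentage of total cycle time."""
    return 100.0 * exec_minutes / cycle_minutes

SUITE_MINUTES = 10
CALENDAR_CYCLE_MINUTES = 14 * 24 * 60   # 2 calendar weeks = 20,160 minutes
WORKING_CYCLE_MINUTES = 2 * 5 * 8 * 60  # 2 working weeks = 4,800 minutes

calendar_pct = execution_share(SUITE_MINUTES, CALENDAR_CYCLE_MINUTES)
working_pct = execution_share(SUITE_MINUTES, WORKING_CYCLE_MINUTES)

print(f"Calendar time: {calendar_pct:.2f}% spent executing tests")
print(f"Working time:  {working_pct:.2f}% spent executing tests")
```

Either way, execution is a fraction of a percent of the cycle (about 0.05% of calendar time, about 0.2% of working time), so even a suite twice as fast would barely move the release date.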

The Execution Speed Red Herring

The automation testing market is booming: it is expected to grow from $28.1B in 2023 to $55.2B by 2028, a 14.5% CAGR.
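The quoted growth rate is consistent with the two market figures. Compound annual growth rate is (end / start)^(1/years) - 1, and over the 5 years from 2023 to 2028 it works out to about 14.5%:

```python
# Check the quoted market growth rate.
# CAGR = (end / start) ** (1 / years) - 1

def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.145 means 14.5%)."""
    return (end_value / start_value) ** (1 / years) - 1

rate = cagr(28.1, 55.2, 2028 - 2023)  # $28.1B (2023) -> $55.2B (2028)
print(f"CAGR: {rate:.1%}")
```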

CI/CD adoption is a key driver, according to the ThinkSys QA Trends Report 2026. Teams recognize that execution speed matters. But here's the disconnect: cycle times haven't improved proportionally.

Traditional automation makes existing tests run faster. It doesn't help you define new tests or investigate failures. Your Selenium scripts execute quickly, but someone still has to write them. Your Playwright tests catch bugs efficiently, but someone still has to investigate them and document reproduction steps.

When your test suite runs in 8 minutes but your QA cycle takes 2 weeks, execution speed isn't your bottleneck.

The Three Hidden Cycle Time Drivers

Here's where your QA cycle time actually goes.

Test case definition overhead. Your QA engineers spend 40-60% of their time writing test scenarios for new features and refactored code. Not executing tests, but defining what to test and how to test it. Every new feature requires manually authored test cases. Every code refactor requires test maintenance.

Bug investigation tax. When QA finds a bug, they file a ticket. But the ticket is incomplete: it's missing network logs, exact reproduction steps, or environment details. The developer requests clarification. QA reproduces the issue again. The developer asks follow-up questions. This back-and-forth adds 2-3 round trips before a fix even starts.

Communication latency. Even when each step takes hours, async handoffs add days. QA finds bug → files ticket → developer requests clarification → QA reproduces → developer fixes → QA retests. Every handoff is a multiplier on cycle time.
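To see why handoffs dominate, here is a toy model of that loop in Python. Every duration is a hypothetical placeholder, not a measurement: each step involves a little hands-on work, then waits roughly a working day (8 hours) before the next person picks it up.

```python
# Toy model of the QA <-> developer bug loop. Each step has a short burst of
# hands-on work followed by an async wait before the next person picks it up.
# All durations are hypothetical placeholders, not measurements.

from dataclasses import dataclass

@dataclass
class Step:
    name: str
    active_hours: float        # hands-on work for this step
    handoff_wait_hours: float  # queue time before the next step starts

BUG_LOOP = [
    Step("QA files ticket", 0.5, 8),
    Step("Dev requests clarification", 0.25, 8),
    Step("QA reproduces", 1.0, 8),
    Step("Dev fixes", 2.0, 8),
    Step("QA retests", 0.5, 0),
]

# A ticket with full context up front skips the clarification request
# and the re-reproduction it triggers.
COMPLETE_TICKET_LOOP = [
    s for s in BUG_LOOP
    if s.name not in {"Dev requests clarification", "QA reproduces"}
]

def loop_time(steps: list[Step]) -> tuple[float, float]:
    """Return (active hours, elapsed hours) for one pass through the loop."""
    active = sum(s.active_hours for s in steps)
    elapsed = active + sum(s.handoff_wait_hours for s in steps)
    return active, elapsed

for label, loop in [("incomplete ticket", BUG_LOOP), ("complete ticket", COMPLETE_TICKET_LOOP)]:
    active, elapsed = loop_time(loop)
    print(f"{label}: {active} h of work, {elapsed} h elapsed")
```

With these made-up numbers, 4.25 hours of actual work stretch to 36.25 elapsed hours, while a ticket complete enough to skip one clarification round finishes in 19. The exact figures don't matter; the point is that wait time per handoff, not work time, drives the total.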

These three overheads are why QA cycle time reduction requires more than faster test execution.

What Actually Changes the Equation

According to Qadence AI (2025), AI-led testing can shrink regression cycles that once took weeks to days or even hours by combining candidate test case generation with impact analysis. The gain doesn't come from faster execution; it comes from a fundamentally different way of generating tests.

Autonomous test generation from commits means AI agents read git diffs and design specs to generate test candidates. Zero time spent authoring test cases. The system determines what to test based on code changes and design intent.
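The diff-reading half of this can be sketched without any AI at all. The Python below is a deliberately simple, rule-based stand-in for impact analysis: it pulls the changed paths out of a unified diff (the `+++ b/<path>` headers that `git diff` emits) and looks them up in a coverage map. The map, the paths, and the test names are hypothetical; an autonomous system would infer this mapping from code and design specs rather than read it from a hand-written table.

```python
# Rule-based stand-in for commit-driven impact analysis: read the changed
# paths from a unified diff and map them to test candidates.
# COVERAGE_MAP, the paths, and the test names are hypothetical examples.

import re
from fnmatch import fnmatch

# Hypothetical map from path glob to the test candidates that cover it.
COVERAGE_MAP = {
    "src/checkout/*": ["checkout: happy path", "checkout: declined card"],
    "src/auth/*": ["login: valid credentials", "login: locked account"],
}

# git diff marks the post-change path of each file with "+++ b/<path>".
NEW_PATH_HEADER = re.compile(r"^\+\+\+ b/(.+)$", re.MULTILINE)

def changed_files(diff_text: str) -> list[str]:
    """Paths of files added or modified in a unified git diff."""
    return NEW_PATH_HEADER.findall(diff_text)

def candidate_tests(diff_text: str) -> list[str]:
    """Test candidates for every changed file, in order, without duplicates."""
    candidates: list[str] = []
    for path in changed_files(diff_text):
        for pattern, tests in COVERAGE_MAP.items():
            if fnmatch(path, pattern):
                for test in tests:
                    if test not in candidates:
                        candidates.append(test)
    return candidates

SAMPLE_DIFF = """\
diff --git a/src/checkout/cart.py b/src/checkout/cart.py
--- a/src/checkout/cart.py
+++ b/src/checkout/cart.py
@@ -1 +1 @@
-TAX_RATE = 0.07
+TAX_RATE = 0.08
"""

print(candidate_tests(SAMPLE_DIFF))
```

Deleted files appear as `+++ /dev/null` and are skipped by the regex, a reasonable default when the goal is choosing what to test next.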

Comprehensive bug tickets upfront means network logs, reproduction steps, severity classification, and environment details are captured automatically. Developers get complete context on first view. Zero follow-up questions. Zero ping-pong.
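One way to make "complete on first view" enforceable is to treat the ticket as a data structure with required fields and refuse to file it while any are empty. Here is a minimal Python sketch; the field names and the three-level severity scale are illustrative assumptions, not any particular issue tracker's schema:

```python
# A bug ticket modeled as a data structure whose context fields are required.
# Field names and the severity scale are illustrative assumptions, not any
# specific issue tracker's schema.

from dataclasses import dataclass, field
from enum import Enum
from typing import Optional

class Severity(Enum):
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3

@dataclass
class BugTicket:
    title: str
    severity: Optional[Severity] = None
    repro_steps: list[str] = field(default_factory=list)
    network_log: list[str] = field(default_factory=list)        # captured requests/responses
    environment: dict[str, str] = field(default_factory=dict)   # browser, OS, build, ...

    def missing_context(self) -> list[str]:
        """Required fields a developer would otherwise have to ask about."""
        missing = []
        if self.severity is None:
            missing.append("severity")
        if not self.repro_steps:
            missing.append("repro_steps")
        if not self.network_log:
            missing.append("network_log")
        if not self.environment:
            missing.append("environment")
        return missing

    def ready_to_file(self) -> bool:
        """True only when no required context is missing."""
        return not self.missing_context()

partial = BugTicket("Cart total ignores discount", repro_steps=["Add item", "Apply code SAVE10"])
print(partial.missing_context())  # ['severity', 'network_log', 'environment']
```

Each name returned by `missing_context()` corresponds to one follow-up question the developer would otherwise have to send back.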

Concrete example: QA cycle time dropped from 2 weeks to 3 days with autonomous workflows. The change didn't come from faster test execution; it came from eliminating definition overhead and communication loops entirely. Tools like QA flow illustrate this: they generate tests on their own from Figma designs and GitHub commits, and they produce detailed bug tickets that reduce the need for developer follow-up.

The Architectural Difference That Matters

AI-assisted test writing (like Copilot for Cypress) still leaves humans defining what to test. You're writing test cases faster, but you're still writing them. Autonomous systems generate test candidates from design intent and code changes. The system decides what needs testing based on actual changes.

Intent-based testing from Figma specs survives refactors. Implementation-based testing from CSS selectors breaks every time the DOM changes. One approach scales with your codebase. The other creates maintenance debt.
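The refactor-survival claim can be illustrated with a toy model in Python. Elements are plain dicts, and the "refactor" renames a class and adds a wrapper div while keeping the button's role and visible label. This is a hypothetical model of the two locator styles, not a browser API; real frameworks offer role-and-name queries of this kind, such as Playwright's `get_by_role`.

```python
# Toy model of implementation-based vs intent-based locators.
# Elements are plain dicts; nothing here is a real browser API.

def css_path_locator(elements: list[dict], class_path: str) -> list[dict]:
    """Implementation-based: match the exact DOM class path."""
    return [e for e in elements if e["class_path"] == class_path]

def intent_locator(elements: list[dict], role: str, label: str) -> list[dict]:
    """Intent-based: match what the user sees and does (role + visible label)."""
    return [e for e in elements if e["role"] == role and e["label"] == label]

# The same "Place order" button before and after a markup refactor.
BEFORE = [{"class_path": "form.checkout > button.btn-primary",
           "role": "button", "label": "Place order"}]
AFTER = [{"class_path": "form.checkout > div.actions > button.cta",
          "role": "button", "label": "Place order"}]

SELECTOR = "form.checkout > button.btn-primary"  # written against the old markup

for name, dom in [("before refactor", BEFORE), ("after refactor", AFTER)]:
    found_by_css = len(css_path_locator(dom, SELECTOR))
    found_by_intent = len(intent_locator(dom, "button", "Place order"))
    print(f"{name}: css={found_by_css}, intent={found_by_intent}")
```

The CSS-path locator finds the button before the refactor and nothing after it; the intent locator finds it both times, because nothing the user sees has changed.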

Autonomous generation from Figma designs and GitHub commits caught 847 bugs with zero human-written test cases. That's not humans writing tests faster; it's systems generating test coverage that humans wouldn't manually define. The qaflow.com/audit tool can analyze your current testing approach and identify where autonomous workflows would eliminate bottlenecks.

Where Human QA Engineering Goes Instead

Autonomous workflows don't replace QA engineers. They eliminate the regression grunt work that holds back high-value contributions. Your QA team shifts from writing repetitive test cases to exploratory testing focused on domain-specific edge cases, which helps them find 3x more critical bugs per hour.

The ROI math: a 60% cut in regression time frees that capacity for exploratory testing. Exploratory testing finds issues autonomous systems miss, such as UX problems, domain-logic errors, and customer workflow edge cases. These are the bugs that actually hurt your users, and they require human judgment to identify.
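The ROI arithmetic itself is simple. As a sketch, assume a hypothetical team that spends 40 hours on regression testing per release; a 60% cut frees 24 of those hours for exploratory work:

```python
# Illustrative ROI arithmetic for a 60% cut in regression time.
# REGRESSION_HOURS_PER_RELEASE is a hypothetical input; plug in your own.

def freed_hours(regression_hours: float, reduction: float) -> float:
    """Hours per release no longer spent on regression testing."""
    return regression_hours * reduction

REGRESSION_HOURS_PER_RELEASE = 40
REDUCTION = 0.60

freed = freed_hours(REGRESSION_HOURS_PER_RELEASE, REDUCTION)
remaining = REGRESSION_HOURS_PER_RELEASE - freed
print(f"{freed:.0f} h/release freed for exploratory testing; {remaining:.0f} h of regression remain")
```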

This isn't about doing QA faster. It's about doing different QA.

The Real Cycle Time Equation

Test execution speed was never the QA bottleneck at scale. Test case definition, bug investigation, and communication overhead were. Autonomous workflows remove all three: they generate tests from commits, capture full debugging context up front, and close the loop without human handoffs.

The 2-week to 3-day transformation isn't about running tests faster. It's about eliminating the work that happens between test runs.

That's not automation. That's leverage.
