Intent-based testing vs. implementation-based testing: Why CSS selectors break your test suite

Your test suite has 1000 tests. Engineering refactors the checkout flow. 800 tests break.

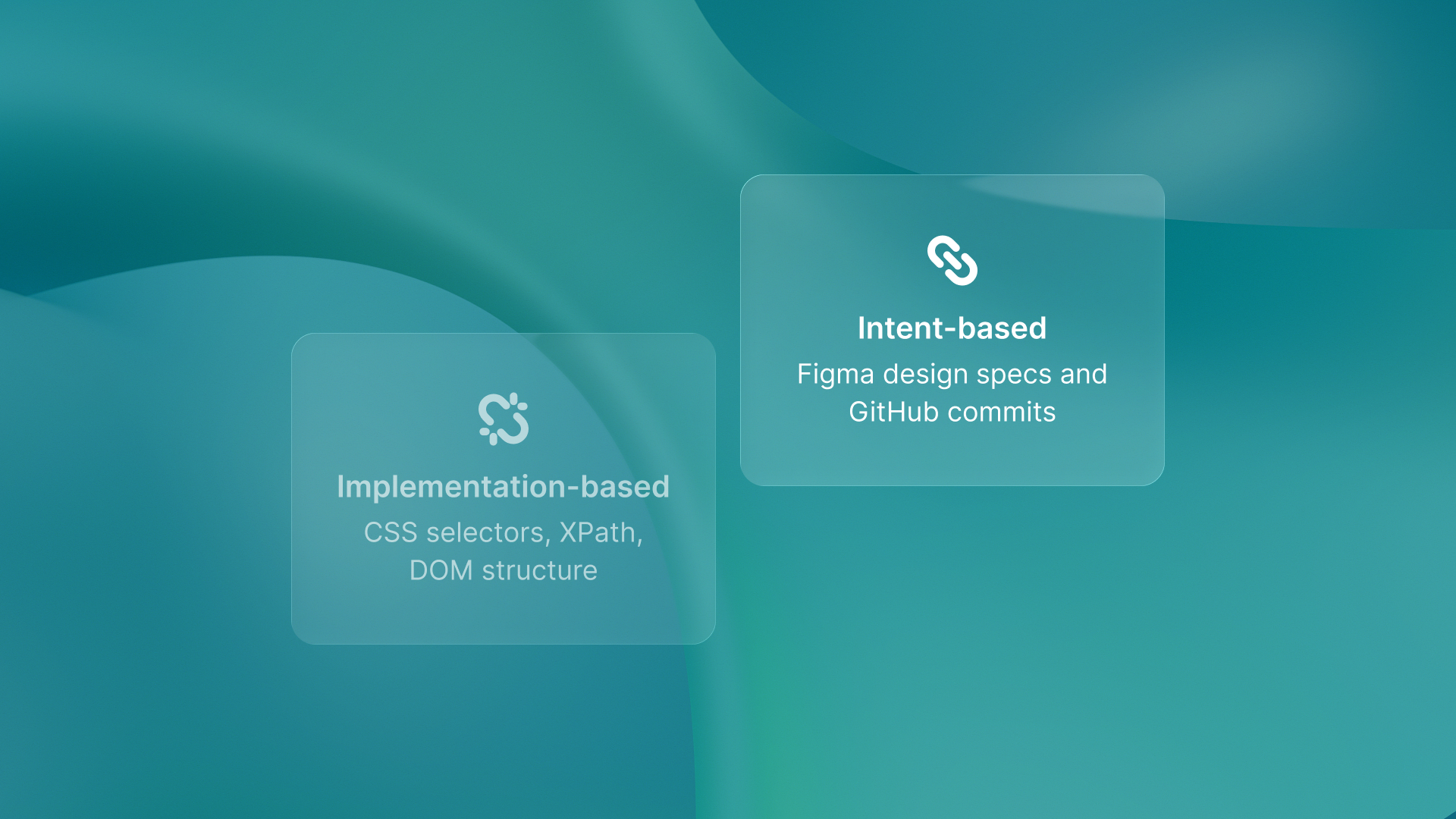

This isn't a test quality problem. It's an architecture problem. Traditional test automation couples tests to implementation details - CSS selectors, DOM paths, element IDs - that change constantly during normal development. Every refactor can turn into a test maintenance problem. This happens because tests depend on how the code is written, not on what the user wants to do.

The tension is real: tests must be stable enough to catch real bugs but specific enough to validate behavior. Implementation-based testing fails this balance every time. Intent-based testing solves it by anchoring tests to design specifications that don't change when code does.

Why CSS selectors make tests brittle

CSS selectors, XPath queries, and data-testid attributes are implementation details. They change during refactors, A/B tests, and design system migrations. When engineering changes a button from <button class="submit-btn"> to <div role="button" data-testid="checkout">, a test that looks up .submit-btn no longer finds its element and has to be rewritten to call document.querySelector('[data-testid="checkout"]').click() instead. The user behavior is identical. The test is dead.

This cascades across your entire suite. One component refactor breaks tests across multiple features because tests are coupled to shared CSS classes or component structure. You're not testing user intent. You're testing DOM implementation.
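The breakage described above can be reproduced in a few lines. This is a minimal sketch, not a real browser test: the elements are plain objects standing in for DOM nodes, and the button text "Place order" and the helper names findByClass and clickSubmit are assumptions made for illustration.

```javascript
// Hypothetical, simplified stand-ins for DOM elements (plain objects, not a real DOM).
// The visible text "Place order" is an assumption for this example.
const beforeRefactor = [
  { tag: "button", classes: ["submit-btn"], attrs: {}, text: "Place order" },
];
const afterRefactor = [
  {
    tag: "div",
    classes: [],
    attrs: { role: "button", "data-testid": "checkout" },
    text: "Place order",
  },
];

// Selector-style lookup: return the first element carrying the given CSS class, or null.
function findByClass(elements, className) {
  return elements.find((el) => el.classes.includes(className)) ?? null;
}

// The "test": click whatever .submit-btn resolves to, or fail if nothing matches.
function clickSubmit(elements) {
  const target = findByClass(elements, "submit-btn");
  if (target === null) {
    return "FAIL: no element matches .submit-btn";
  }
  return `PASS: clicked "${target.text}"`;
}

console.log(clickSubmit(beforeRefactor)); // PASS: clicked "Place order"
console.log(clickSubmit(afterRefactor)); // FAIL: no element matches .submit-btn
```

Nothing the user sees has changed between the two versions. Only the class name the test was coupled to is gone.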

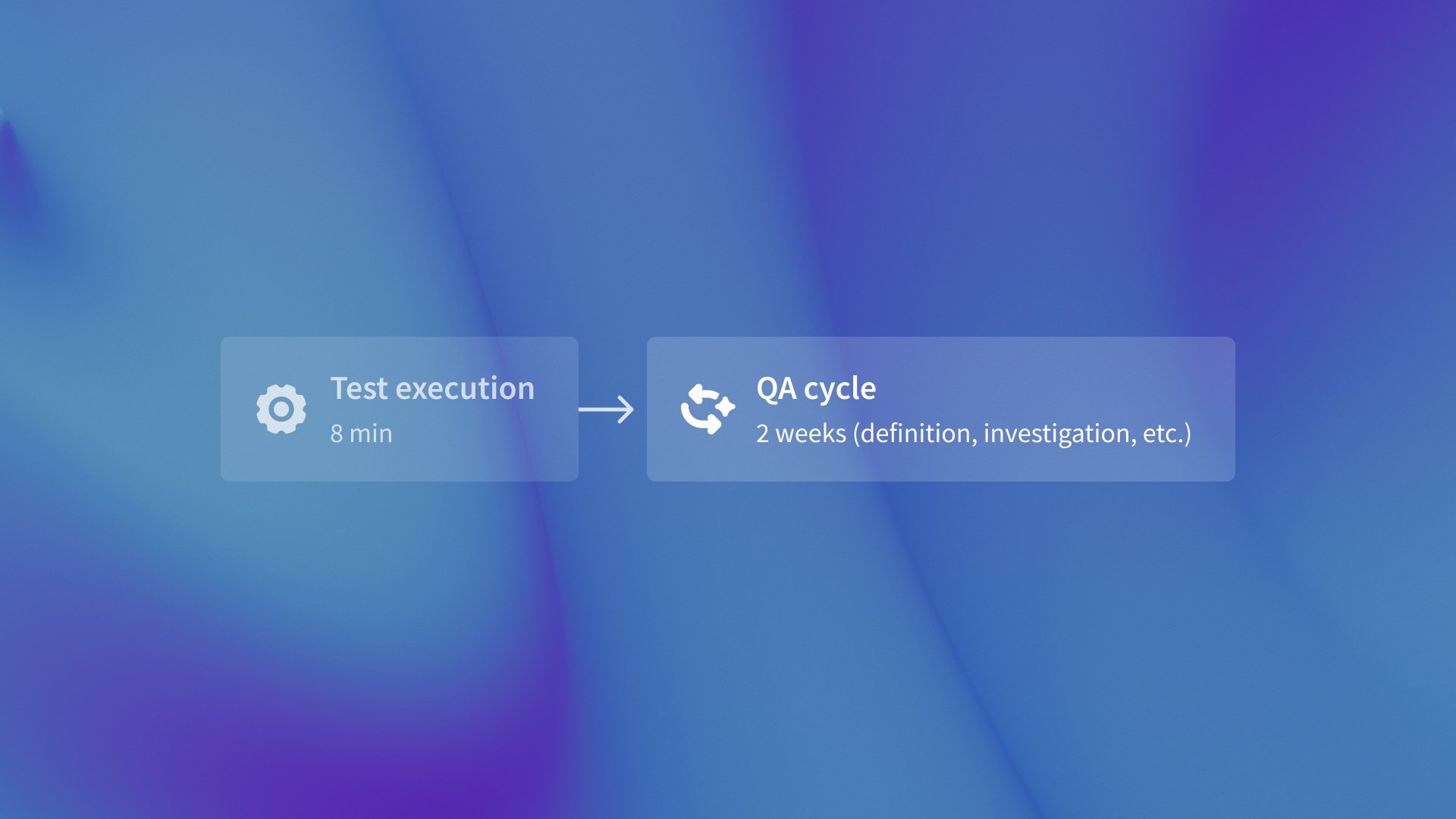

The test maintenance tax at scale

About 60% of teams focus on automation to free manual testers for exploratory work, and regression testing takes up 40-50% of a QA team's time on average (QA Solve AI Survey of 100+ Dev Teams 2025). That time isn't spent writing new tests. It's spent fixing tests broken by code changes, not actual bugs.

The math gets worse at scale. A 1000-test suite with 80% selector-based tests means 800 tests potentially break during a UI refactor. Engineering teams have a choice. They can skip important refactors, which leads to technical debt. Or, they can spend sprint cycles fixing test suites instead of delivering new features.

Bloomberg engineers cut regression cycle time by 70%. They did this by grouping and stabilizing flaky tests using AI methods (Qadence AI 2025). The problem wasn't execution speed. It was test stability during code evolution.

Intent-based testing: coupling to design specs instead of implementation

Here’s the design change:

- Previously, we tested against the DOM structure: the user clicks the element with the class submit-btn.

- Now, we test against Figma specifications: the user completes checkout by clicking the main button.

Design specs define user intent and behavior flows. CSS selectors define implementation details. Intent doesn't change when you refactor from <button> to <div role="button">.
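One way to picture an intent-anchored check is to describe the step by what the user perceives (a button with a given label) and resolve it against whatever markup currently exists. The sketch below is an illustration under stated assumptions, not how any particular tool works: the intent object shape, the label "Place order", the tiny implicit-role table, and the function names roleOf and resolveIntent are all hypothetical.

```javascript
// Hypothetical intent, as it might be derived from a design spec:
// "the user completes checkout by clicking the main button".
const checkoutIntent = { action: "click", role: "button", label: "Place order" };

// Tags that carry an implicit role (a tiny subset, for illustration only).
const implicitRoles = { button: "button", a: "link" };

// Effective role: an explicit role attribute wins, otherwise the tag's implicit role.
function roleOf(el) {
  return el.attrs.role ?? implicitRoles[el.tag] ?? null;
}

// Resolve an intent to an element by role and visible label, ignoring classes and IDs.
function resolveIntent(elements, intent) {
  return (
    elements.find(
      (el) => roleOf(el) === intent.role && el.text === intent.label
    ) ?? null
  );
}

// Simplified stand-ins for the markup before and after the refactor.
const legacyMarkup = [
  { tag: "button", attrs: { class: "submit-btn" }, text: "Place order" },
];
const refactoredMarkup = [
  {
    tag: "div",
    attrs: { role: "button", "data-testid": "checkout" },
    text: "Place order",
  },
];

for (const [name, markup] of [
  ["before", legacyMarkup],
  ["after", refactoredMarkup],
]) {
  const target = resolveIntent(markup, checkoutIntent);
  console.log(name, target ? "resolved" : "not resolved");
}
// prints "before resolved" then "after resolved"
```

The check still fails if the button's role or label changes, which is the point: those are changes a user would notice, and they would show up in the design spec as well.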

QA flow generates tests from Figma designs and GitHub commits. Engineering refactors the checkout component three times. Tests remain valid because they're anchored to design intent, not CSS classes. The system caught 847 bugs with zero human-written test cases. Tests don't break during refactors because they're not coupled to code structure.

Try the qaflow.com/audit tool to analyze your current test suite's brittleness. It identifies what percentage of tests would break during a major component refactor. That percentage represents architectural technical debt, not test quality issues.

What this means for test automation architecture

Automated testing executes human-written tests tied to implementation. Autonomous testing generates tests from design specs that define intent. That's not a tooling difference. That's an architecture difference.

The 40-50% of QA time spent on regression testing can be redirected to exploratory testing, which finds three times more critical bugs each hour.

Audit your current test suite. Calculate what percentage would break during a major component refactor. That number tells you how much architectural technical debt you're carrying. Switching to intent-based testing needs design specifications like Figma or Sketch as the main reference. Teams without design-focused workflows will have a harder time adopting this change.
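A rough first pass at that calculation can be scripted. The sketch below is a heuristic under stated assumptions, not the qaflow.com/audit tool: it assumes you can export each test with the locator strings it uses, it treats class, ID, XPath, and data-testid locators as implementation-coupled, and the suite data and the "role=... name=..." locator format are made up for the example.

```javascript
// Rough heuristic: a locator counts as implementation-coupled when it looks like
// a CSS class, an element ID, an XPath, or a data-testid attribute selector.
const implementationPatterns = [
  /^\./, // .submit-btn
  /^#/, // #apply
  /^\/\//, // //div[@id="cart"]
  /\[data-testid=/, // [data-testid="promo"]
];

function isImplementationCoupled(locator) {
  return implementationPatterns.some((pattern) => pattern.test(locator));
}

// Percentage (0-100) of tests that use at least one implementation-coupled locator.
function brittlenessPercent(tests) {
  if (tests.length === 0) return 0;
  const exposed = tests.filter((t) => t.locators.some(isImplementationCoupled));
  return (exposed.length * 100) / tests.length;
}

// Hypothetical inventory of tests and the locators they use.
const suite = [
  { name: "checkout submits order", locators: [".submit-btn"] },
  { name: "cart shows total", locators: ['//div[@id="cart"]'] },
  { name: "promo code applies", locators: ['[data-testid="promo"]', "#apply"] },
  { name: "address form validates", locators: ["#address-form"] },
  { name: "user can log in", locators: ["role=button name=Log in"] },
];

console.log(`${brittlenessPercent(suite)}% of tests are selector-coupled`);
// prints "80% of tests are selector-coupled"
```

A pattern match like this overestimates in places (a stable ID may never change) and underestimates in others (deep descendant selectors), so treat the result as a starting estimate to refine by hand.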

Test brittleness isn't a maintenance problem to be managed with better tooling or more QA engineers. It's an architecture problem that requires testing a different layer. That's not better automation. That's a different testing paradigm.
