The QA scaling bottleneck: Why hiring or shipping slower both lose

Your engineering team is growing from 50 to 500 engineers. Your QA team isn't.

This creates a trilemma that kills growth momentum. You can hire QA engineers proportionally (10-20 new hires, 10-month onboarding, $1.2M annually). You can accept 2-week QA cycles that constrain releases. Or you can implement traditional automation that just shifts the bottleneck to test maintenance. Most companies think automation solves this. The QA services market growing from $50.7B in 2025 to $107.2B by 2032 proves it doesn't. Companies are spending more on automation but still hiring QA at the same rate.

This post looks at the real costs of the QA scaling problem. It explains why changing the architecture, not just speeding up execution, is the only solution.

The math on hiring QA proportionally

Scaling from 50 to 500 engineers means hiring 10-20 QA engineers at the typical ratio of one QA engineer per 25-50 engineers. At a $69,000 median salary (US Bureau of Labor Statistics via Coursera), that's $690K to $1.38M annually in salary alone. Add recruitment costs, 10-month onboarding to understand your product domain and test infrastructure, tooling licenses, and training investment. A realistic all-in figure approaches $1.2M.
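The salary range follows from simple arithmetic. Here is a minimal sketch, assuming headcount is just engineers divided by the ratio and counting salary only. The function and variable names are illustrative and not taken from any tool.

```python
# Salary-only cost of staffing QA at a fixed engineer-to-QA ratio.
# Figures are the ones quoted in this post; names are illustrative.

ENGINEERS = 500
MEDIAN_QA_SALARY = 69_000  # USD per year, as cited in the post


def qa_headcount(engineers: int, engineers_per_qa: int) -> int:
    """QA engineers needed at one QA engineer per `engineers_per_qa` engineers."""
    return engineers // engineers_per_qa


def annual_salary_cost(headcount: int, salary: int = MEDIAN_QA_SALARY) -> int:
    """Annual salary bill, excluding recruiting, tooling, and ramp-up."""
    return headcount * salary


low_hires = qa_headcount(ENGINEERS, 50)   # leanest ratio, 1:50
high_hires = qa_headcount(ENGINEERS, 25)  # densest ratio, 1:25
low_cost = annual_salary_cost(low_hires)
high_cost = annual_salary_cost(high_hires)

print(f"{low_hires}-{high_hires} QA engineers")
print(f"${low_cost:,} to ${high_cost:,} per year in salary")
```

Swapping in your own headcount, ratio, and salary band gives a quick floor for the hiring option before recruiting and onboarding costs are layered on.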

Here's a hidden cost that many people miss: the 10 months of ramp-up delay testing for every feature released during fast growth. Your QA team is always playing catch-up. By the time they're productive, your product has evolved and they're learning new workflows again. This math doesn't work for Series B-C companies that need to preserve runway while moving fast.

The compound cost of slower release cycles

If you can't hire QA proportionally, you accept longer cycle times. A 2-week QA cycle means biweekly releases at best. Competitors shipping weekly get 52 releases per year versus your 26. Over 18 months, that's 78 versus 39 releases. They've tested twice as many hypotheses and incorporated twice as much market feedback.
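The release gap can be checked from the cadence alone. Here is a minimal sketch, assuming 52 weeks per year and one release per completed cycle. The `releases` helper is illustrative.

```python
# Count releases in a time window for a given release cadence.
# Assumes 52 weeks per year and one release per completed cycle.

WEEKS_PER_YEAR = 52


def releases(months: int, cadence_weeks: int) -> int:
    """Completed release cycles in `months` at one release every `cadence_weeks`."""
    return (months * WEEKS_PER_YEAR) // (12 * cadence_weeks)


for months in (12, 18):
    weekly = releases(months, cadence_weeks=1)
    biweekly = releases(months, cadence_weeks=2)
    print(f"{months} months: weekly={weekly}, biweekly={biweekly}, gap={weekly - biweekly}")
```

Whatever window you pick, halving the cycle length doubles the number of releases, so the absolute gap keeps widening the longer the slower cadence persists.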

In fast-moving markets like fintech, dev tools, and B2B SaaS, faster iteration wins. Slower releases mean losing competitive positioning while competitors learn faster from production data. This cost doesn't appear on your P&L, but it is real. It includes lost market share, missed chances, and slower product-market fit discovery. The QA scaling bottleneck compounds across every release cycle.

Why traditional automation doesn't solve the trilemma

Here's the paradox: the QA services market is growing from $50.7 billion to $107.2 billion. This is happening even though automation-led segments are growing faster (ThinkSys QA Trends Report 2026). If automation solved the problem, the market would shrink. Instead it's doubling. That means automation is shifting work, not eliminating it.

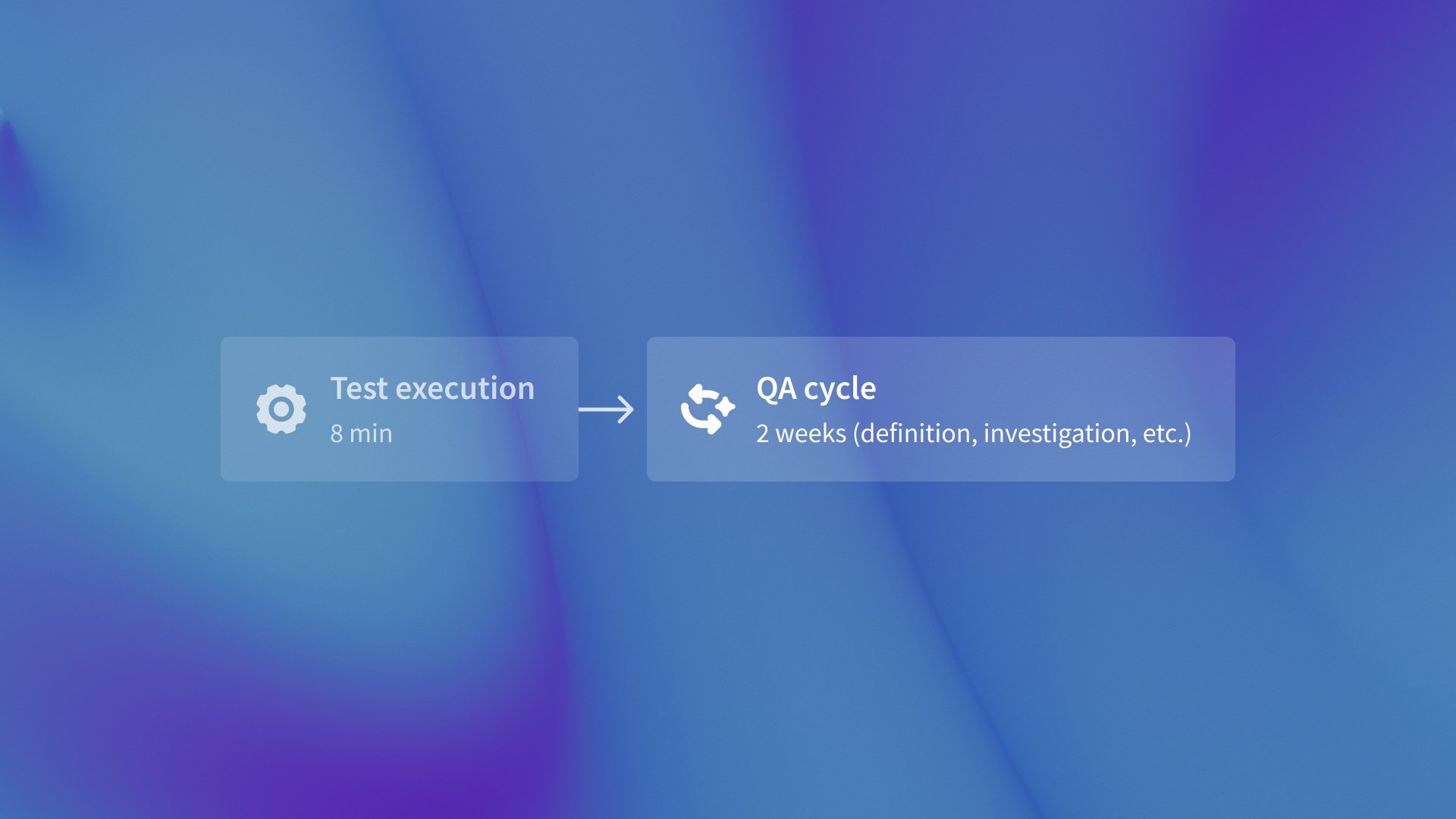

Traditional test automation speeds up execution, but humans still define test cases. Selenium, Cypress, and Playwright scripts are written by QA engineers or developers. That's still human-in-the-loop. Tests break during refactors when CSS selectors change, DOM structure updates, or API responses evolve. You've shifted the bottleneck from manual test execution to test script maintenance. The core problem at scale isn't execution speed. It's defining what to test and keeping tests valid as code changes.

The architectural shift: Autonomous vs. automated testing

Automated testing runs scripts humans wrote. Autonomous testing generates, executes, and reports without a human in the loop for test definition. That's the distinction that matters. Intent-based testing creates tests from Figma design specs and GitHub commit messages. It focuses on user behavior instead of implementation details like CSS selectors and DOM structure. When code is refactored, tests stay valid because design intent hasn't changed.
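A toy model makes the difference concrete. The sketch below is not Playwright's API or any vendor's implementation. It assumes a page is just a list of elements, each with a semantic role, a user-visible label, and a CSS class, and it compares a lookup tied to the class with a lookup tied to what the user sees. All names are hypothetical.

```python
# Toy comparison: implementation-based lookup vs. intent-based lookup.
# The Element model and both finder functions are hypothetical illustrations.
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Element:
    role: str       # semantic role, e.g. "button"
    name: str       # label the user actually sees
    css_class: str  # implementation detail that refactors tend to change


def find_by_class(page: list[Element], css_class: str) -> Optional[Element]:
    """Selector-style lookup: depends on how the element is implemented."""
    return next((el for el in page if el.css_class == css_class), None)


def find_by_intent(page: list[Element], role: str, name: str) -> Optional[Element]:
    """Intent-style lookup: depends on what the user can see and do."""
    return next((el for el in page if el.role == role and el.name == name), None)


# Same checkout button before and after a styling refactor.
before = [Element("button", "Checkout", "btn-primary")]
after = [Element("button", "Checkout", "cta-button--v2")]

selector_survives = find_by_class(after, "btn-primary") is not None
intent_survives = find_by_intent(after, "button", "Checkout") is not None

print(f"class selector still matches after refactor: {selector_survives}")
print(f"role + label still matches after refactor:  {intent_survives}")
```

In this model the class-based lookup fails once the class is renamed, while the role-and-label lookup keeps matching because nothing the user sees has changed.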

QA flow generates tests autonomously from design specs, runs them in parallel on every push, and files detailed bug tickets with network logs in Jira and Linear. The result: cycle time cut from 2 weeks to 3 days, 847 bugs caught, zero human-written test cases. Tools that use AI to generate Playwright scripts faster still require humans to define what to test. That's not autonomous. That's faster automation.

Breaking the trilemma: The economics of autonomous testing

Autonomous testing eliminates the need to hire QA proportionally because test generation scales with commits, not headcount. It accelerates release cycles to 3 days instead of 2 weeks. QA engineers move from repetitive tasks to important exploratory testing. They focus on user experience validation and specific edge cases. This helps them find three times more critical bugs each hour.

This doesn't replace human judgment on exploratory testing or UX validation. It eliminates the low-leverage work that creates the scaling bottleneck. The qaflow.com/audit tool analyzes your current test coverage and identifies where autonomous testing can reduce cycle time while maintaining coverage. Intent-based autonomous testing doesn't force a choice between hiring proportionally and shipping slower. It decouples test coverage from QA headcount while accelerating cycles.

Many companies are stuck in a tough spot. They focus on automation, which speeds up execution, when what they need is autonomy, which removes the human work of defining tests. That's not a QA scaling problem. That's an architecture problem.
