The hidden tax on test automation maintenance

You automated your tests. Maintenance costs went up, not down.

Your team invested in Selenium, Cypress, or Playwright to reduce QA costs and scale faster.

Instead, you're spending 20+ hours a week fixing test scripts that break after every refactor. Up to 50% of your automation budget now goes to script maintenance, according to the World Quality Report as cited by IT Convergence (2025).

Traditional test automation doesn't solve the QA scaling problem.

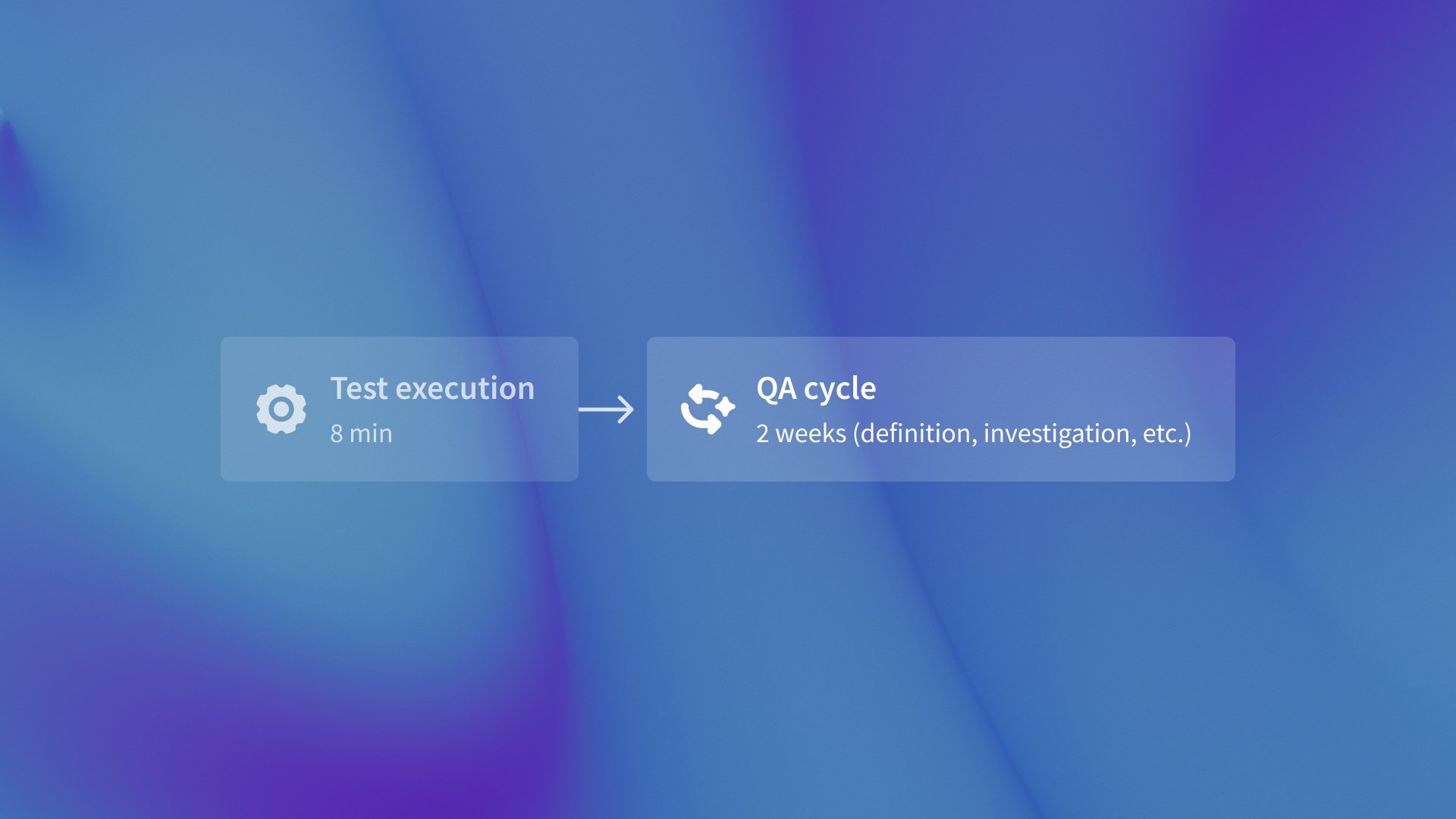

It shifts the bottleneck from test execution to test maintenance.

The hidden cost structure of test automation maintenance

That 50% maintenance budget covers a few specific tasks: updating CSS selectors after UI changes, fixing flaky tests that fail randomly, refactoring test code when application code changes, and investigating false positives that waste developer time.

A Rainforest QA survey found that 55% of teams using Selenium, Cypress, or Playwright spend at least 20 hours each week on their automated tests. That's 25-50% of a full-time QA automation engineer's capacity spent maintaining existing tests instead of writing new coverage.

The maintenance burden compounds as your codebase grows. As engineering teams scale from 50 to 500 engineers, test suite size grows proportionally, but maintenance complexity grows exponentially.

More developers making changes means more refactors, which means more test breakage and more time spent keeping tests current. Instead of finding new bugs, QA engineers spend their time syncing tests with code changes.

Why traditional automation doesn't solve the scaling problem

Automation solves test execution speed by running tests faster than humans can. It doesn't solve test definition scalability because humans still write every test case and maintain them as code changes.

You've moved from "we can't manually test everything" to "we can't maintain all our automated tests." The constraint moved; it didn't disappear.

Even AI-powered script generators, such as AI-assisted Playwright test generation or GitHub Copilot for test writing, don't fix this.

These tools help write scripts faster, but humans still define what to test and scripts still break during refactors. They're productivity tools for the same paradigm, not a different approach to testing architecture.

Why tests break: implementation vs. intent

Implementation-based testing validates how the system works by checking CSS selectors, DOM structure, and API endpoint paths.

Tests break when implementation details change even if behavior remains correct.

Here's a concrete example: you refactor a button from one component library to another.

The DOM structure changes completely, which breaks every test that targets the button's CSS class. Yet the user workflow (clicking the button to submit a form) hasn't changed at all.
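To make the contrast concrete, here is a minimal TypeScript sketch that models this refactor with a toy element tree. The `UiNode` type, the class names, and the helper functions are hypothetical illustrations, not any framework's API. A locator keyed to a CSS class stops matching after the component swap. A locator keyed to what the user perceives, the element's role and accessible name, still finds the button. Real tools expose the second style directly, for example Playwright's `page.getByRole()`.

```typescript
// Hypothetical, minimal model of a rendered element. Real tests would run in a
// browser; this toy tree only shows why the two locator styles diverge.
interface UiNode {
  tag: string;
  classes: string[];
  role?: string; // ARIA role, explicit or implied by the tag
  accessibleName?: string; // what the user (and assistive tech) perceives
  children: UiNode[];
}

// Depth-first traversal that collects every node in the tree.
function walk(node: UiNode, out: UiNode[] = []): UiNode[] {
  out.push(node);
  for (const child of node.children) walk(child, out);
  return out;
}

// Implementation-based locator: matches on a CSS class name.
function findByClass(root: UiNode, cls: string): UiNode[] {
  return walk(root).filter((n) => n.classes.some((c) => c === cls));
}

// Intent-based locator: matches on role and accessible name.
function findByRole(root: UiNode, role: string, name: string): UiNode[] {
  return walk(root).filter((n) => n.role === role && n.accessibleName === name);
}

// Before the refactor: the submit button comes from hypothetical library A.
const before: UiNode = {
  tag: "form", classes: ["signup"], children: [
    { tag: "button", classes: ["libA-btn", "libA-btn--primary"], role: "button", accessibleName: "Submit", children: [] },
  ],
};

// After the refactor: library B adds a wrapper and uses different classes.
const after: UiNode = {
  tag: "form", classes: ["signup"], children: [
    { tag: "div", classes: ["libB-wrapper"], children: [
      { tag: "button", classes: ["libB-button"], role: "button", accessibleName: "Submit", children: [] },
    ] },
  ],
};

for (const [label, dom] of [["before", before], ["after", after]] as const) {
  console.log(
    label,
    "class locator:", findByClass(dom, "libA-btn").length,
    "role locator:", findByRole(dom, "button", "Submit").length,
  );
}
```

Running it prints one line per version. The class-based count drops from 1 to 0 after the refactor, while the role-based count stays at 1.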

Intent-based testing validates what the system should do according to design specifications, not how it's implemented in code.

Tests validate behavior and user intent, which survives refactors.

Tools like QA flow use this approach, generating tests from Figma specs rather than implementation details.

The autonomous testing alternative

Automated testing runs scripts that humans wrote. Autonomous testing generates tests from design specs and commit messages, runs them, and files bug tickets, all without human-written test cases.

When tests are generated from design intent rather than implementation details, refactors don't require updating test scripts.

The same Figma spec produces valid tests for both the old and new versions. It checks that the form has an email field, a submit button, and a success message, whether the form is built with React Hook Form, Formik, or plain HTML.
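Here is a minimal sketch of that idea for a whole form. It assumes a hypothetical requirements format extracted from a design spec, which is not the Figma API and not QA flow's actual format. Each requirement names a role and a visible label. The check ignores CSS classes entirely, so three builds with different internals pass the same spec.

```typescript
// Hypothetical requirement shape derived from a design spec (illustration only).
interface Requirement {
  description: string;
  role: string;
  label: string;
}

// Hypothetical flattened view of what a rendered form exposes to the user.
interface RenderedElement {
  role: string;
  label: string;
  cssClass: string; // implementation detail the check deliberately ignores
}

const signupSpec: Requirement[] = [
  { description: "email field", role: "textbox", label: "Email" },
  { description: "submit button", role: "button", label: "Submit" },
  { description: "success message", role: "status", label: "Thanks for signing up" },
];

// Returns the descriptions of spec requirements the rendered UI does not satisfy.
function missingRequirements(spec: Requirement[], ui: RenderedElement[]): string[] {
  return spec
    .filter((req) => !ui.some((el) => el.role === req.role && el.label === req.label))
    .map((req) => req.description);
}

// Three hypothetical builds of the same form, each captured after submission so
// the success message is present. Class names differ; roles and labels do not.
const builds: Array<[string, RenderedElement[]]> = [
  ["React Hook Form", [
    { role: "textbox", label: "Email", cssClass: "rhf-input" },
    { role: "button", label: "Submit", cssClass: "rhf-submit" },
    { role: "status", label: "Thanks for signing up", cssClass: "rhf-toast" },
  ]],
  ["Formik", [
    { role: "textbox", label: "Email", cssClass: "formik-field" },
    { role: "button", label: "Submit", cssClass: "formik-btn" },
    { role: "status", label: "Thanks for signing up", cssClass: "formik-alert" },
  ]],
  ["plain HTML", [
    { role: "textbox", label: "Email", cssClass: "email" },
    { role: "button", label: "Submit", cssClass: "btn" },
    { role: "status", label: "Thanks for signing up", cssClass: "msg" },
  ]],
];

for (const [buildName, ui] of builds) {
  const missing = missingRequirements(signupSpec, ui);
  console.log(`${buildName}: ${missing.length === 0 ? "pass" : "missing " + missing.join(", ")}`);
}
```

Each build prints `pass`. If the success message were removed from any build, the same check would report `missing success message`. That is the kind of behavioral regression an intent-based test is meant to catch, and it holds regardless of which form library renders the markup.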

The qaflow.com/audit tool analyzes your existing test coverage and identifies where implementation-based tests create maintenance debt.

QA automation engineers should stop spending 20 hours a week on test script maintenance. They can use that time for exploratory testing, UX validation, and specific edge cases that need human judgment.

What this means for your automation strategy

Your existing Selenium, Cypress, or Playwright infrastructure has value. What limits it is the implementation-based paradigm, which ties the maintenance burden to codebase complexity.

You have two choices.

You can continue with traditional automation and keep spending up to 50% of your automation budget on maintenance.

Or you can switch to autonomous testing, which removes the maintenance burden by testing intent instead of implementation.

The maintenance tax isn't a process problem or a tooling problem. It's an architectural problem.

Testing implementation details guarantees a maintenance burden that scales with codebase complexity.

Testing intent from design specs eliminates that tax because behavior survives refactors.

That's not automation. That's leverage.
