Why the QA Bottleneck Determines Your Development Velocity

Development teams using AI copilots now ship 2-3x more features per sprint. QA capacity hasn't kept up.

The velocity mismatch is unprecedented. AI-driven development lets teams build features quickly, but they cannot validate them at the same pace. Without dedicated QA resources, developers spend 20-40% of their time on testing, and that time competes directly with AI-driven feature development. Here's the main issue: elite teams deploy 208 times more frequently than low-performing teams. The key difference isn't talent or code quality. It's testing velocity. Traditional QA automation does not solve this problem because it automates test execution, not test generation. The bottleneck is still how many test cases a person can write.

The AI development acceleration reality

Teams using generative AI ship 2-3x more features per cycle. GitHub Copilot, Cursor, and other AI pair-programming tools can cut implementation time by 40-60%. In the best cases, a task that took two weeks shrinks to about three days. This creates a QA crisis. Every additional feature multiplies the test cases needed: cross-browser combinations, edge cases, and integration scenarios. Meanwhile, QA capacity stays flat or grows only slowly through hiring.

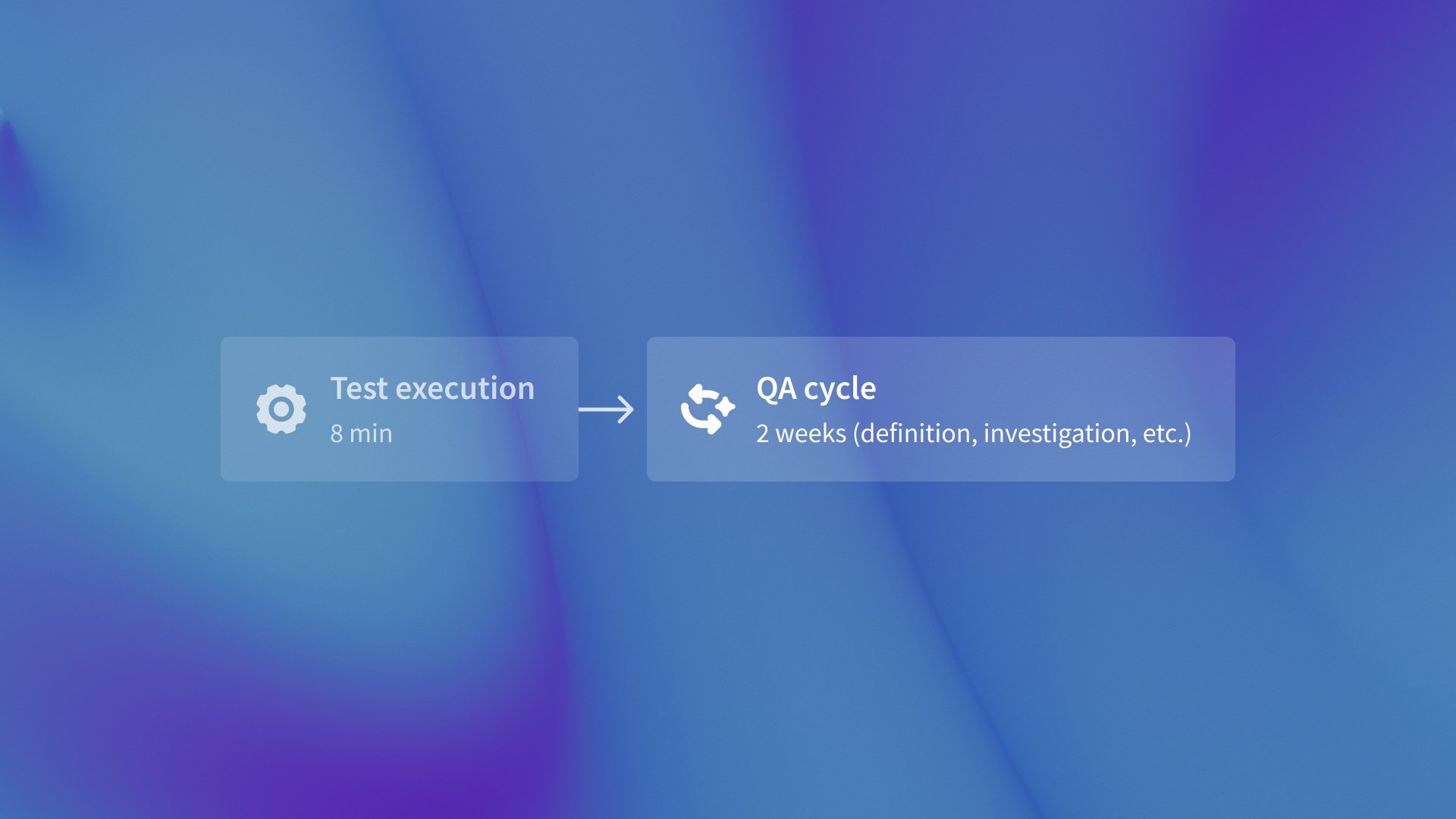

When development velocity was stable, QA automation could keep pace by executing human-written tests faster. But with 3x feature output, even automated execution can't compensate for manual test case creation. The bottleneck isn't running tests. It's writing them.

The math of QA scaling: Why hiring doesn't work

A ratio of 1-2 QA engineers per 5 developers translates to real headcount. A 50-person engineering team needs 10-20 QA engineers at $120-150K per engineer per year, fully loaded. Ramp time to productivity is 3-6 months. Without dedicated QA, developers spend 20-40% of their time on testing, which directly conflicts with AI-accelerated feature development. Teams either slow feature velocity to maintain quality or ship faster with declining coverage. Manual QA stretches release cycles from daily or weekly to bi-weekly or monthly, which erodes the competitive edge of AI-driven development.
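The headcount arithmetic above can be sketched in a few lines. This is a rough illustration, not a staffing model: the function and field names are hypothetical, and it assumes all 50 people on the team count as developers for the purpose of the ratio.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class QaStaffingEstimate:
    min_engineers: int
    max_engineers: int
    min_annual_cost: int  # USD, fully loaded
    max_annual_cost: int  # USD, fully loaded


def estimate_qa_staffing(
    developers: int,
    qa_per_five_devs: tuple[int, int] = (1, 2),
    cost_per_engineer: tuple[int, int] = (120_000, 150_000),
) -> QaStaffingEstimate:
    """Apply the 1-2 QA per 5 developers ratio and the per-engineer cost range."""
    low_ratio, high_ratio = qa_per_five_devs
    low_cost, high_cost = cost_per_engineer
    # Round up: a fractional QA engineer still means one more hire.
    min_engineers = math.ceil(developers * low_ratio / 5)
    max_engineers = math.ceil(developers * high_ratio / 5)
    return QaStaffingEstimate(
        min_engineers=min_engineers,
        max_engineers=max_engineers,
        min_annual_cost=min_engineers * low_cost,
        max_annual_cost=max_engineers * high_cost,
    )


if __name__ == "__main__":
    estimate = estimate_qa_staffing(developers=50)
    print(estimate.min_engineers, estimate.max_engineers)      # 10 20
    print(estimate.min_annual_cost, estimate.max_annual_cost)  # 1200000 3000000
```

Under these assumptions the annual cost lands between $1.2M and $3.0M, before accounting for the 3-6 month ramp time, and it grows linearly with every additional group of developers.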

Why traditional automation doesn't solve the bottleneck

Selenium, Cypress, and Playwright automate test execution, not test generation. Humans still define what to test, write test cases, maintain selectors, and update tests when the UI changes. Tools that suggest selectors or fix broken tests improve one part of the workflow. They make running tests more reliable, but they do not reduce the human effort needed to create test cases. Implementation-based tests break on refactors such as CSS changes and DOM restructuring, even when functionality is unchanged, so test suites need constant human intervention.
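The refactor problem can be illustrated with a small sketch. Everything here is hypothetical: the markup, the class names, and both helper functions are invented for illustration, and Python's standard-library XML parser stands in for a real browser driver. It does not show how Selenium, Cypress, or Playwright are actually called.

```python
import xml.etree.ElementTree as ET

# The same checkout button before and after a purely cosmetic refactor.
BEFORE = '<div><button class="btn-primary checkout">Place order</button></div>'
AFTER = (
    '<section><div class="actions">'
    '<button class="cta-lg">Place order</button>'
    '</div></section>'
)


def find_by_class(markup: str, css_class: str):
    """Implementation-based lookup: depends on a styling class name."""
    root = ET.fromstring(markup)
    for element in root.iter():
        if css_class in element.get("class", "").split():
            return element
    return None


def find_button_by_label(markup: str, label: str):
    """Behavior-based lookup: depends on what the user sees and clicks."""
    root = ET.fromstring(markup)
    for element in root.iter("button"):
        if (element.text or "").strip() == label:
            return element
    return None


if __name__ == "__main__":
    # The class-based lookup works until the refactor renames the class.
    print(find_by_class(BEFORE, "btn-primary") is not None)  # True
    print(find_by_class(AFTER, "btn-primary") is not None)   # False
    # The label-based lookup survives the refactor unchanged.
    print(find_button_by_label(AFTER, "Place order") is not None)  # True
```

The button behaves identically in both versions, yet the class-based test fails after the refactor and someone has to repair it by hand.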

The 208x deployment frequency gap

Elite performers deploy 208 times more frequently than low performers. The main difference is testing speed. It is not about execution automation or CI/CD tools. It is about quickly creating and running complete test suites. Faster deployment cycles mean faster iteration on customer feedback, quicker bug fixes, and a competitive advantage in time-to-market. QA bottlenecks limit how often teams can deploy. Even with great CI/CD tools, teams can only deploy as fast as they can validate changes. If test generation takes 2 weeks, deployment frequency is capped at bi-weekly regardless of development speed.
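The cap described above can be expressed as a simple bottleneck calculation. This is a deliberately simplified sketch: the function name is hypothetical, and it assumes every change must be fully validated before it ships, so the slower of the two stages sets the release cadence.

```python
def max_deploys_per_year(
    dev_cycle_days: int,
    validation_cycle_days: int,
    days_per_year: int = 365,
) -> int:
    """Upper bound on deployments when the slowest stage gates each release."""
    if dev_cycle_days <= 0 or validation_cycle_days <= 0:
        raise ValueError("cycle lengths must be positive")
    bottleneck_days = max(dev_cycle_days, validation_cycle_days)
    return days_per_year // bottleneck_days


if __name__ == "__main__":
    # Two-week test creation caps deployment no matter how fast development is.
    print(max_deploys_per_year(dev_cycle_days=3, validation_cycle_days=14))  # 26
    print(max_deploys_per_year(dev_cycle_days=1, validation_cycle_days=14))  # 26
    # Once validation keeps pace with development, the cap lifts.
    print(max_deploys_per_year(dev_cycle_days=3, validation_cycle_days=3))   # 121
```

Speeding up development from three days to one changes nothing in this model until the validation cycle shrinks as well.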

Autonomous generation as the architectural solution

Autonomous means agents that understand design intent from Figma and code changes from GitHub. They create test cases without human involvement, run full test suites in parallel, and produce ready-to-use bug reports. This breaks the linear scaling constraint: test generation speed becomes independent of human QA capacity. Adding more features doesn't require proportionally more QA engineers because agents generate tests automatically. Tools like QA flow use intent-based testing. They check behavior against design specs, such as what a button should do according to Figma, rather than against implementation details such as CSS selectors. This creates resilient test suites that survive refactors. The tool at qaflow.com/audit quickly analyzes SEO problems, broken links, and performance issues. It illustrates how automated generation can shorten testing from two weeks to three days while maintaining good coverage.

The QA bottleneck isn't a people problem or a tooling problem. It's an architectural problem. Traditional automation optimized execution speed while leaving test generation entirely manual. AI-accelerated development has made this mismatch untenable. Elite teams deploy 208x more frequently because they've solved test generation, not just execution. In 2026, QA velocity determines whether your AI-accelerated development creates competitive advantage or just creates a backlog. Autonomous test generation isn't a nice-to-have optimization. It's the difference between deploying daily and deploying monthly.
