Your test automation saved $340K. It cost you $480K

Most engineering teams calculate automation ROI by comparing test execution time to manual testing hours.

That's not an ROI calculation. That's a fairy tale.

An insurance company projected $340K in annual savings from their test automation initiative.

They spent $480K on maintenance costs instead. Release velocity dropped 23%.

This wasn't an implementation failure or a vendor problem.

It was a fundamental miscalculation in how companies evaluate test automation ROI.

Traditional business cases compare automation to manual testing in isolation, completely ignoring maintenance costs and velocity impacts on the entire development pipeline.

This matters because 73% of automation projects fail for exactly this reason, according to VirtuosoQA's industry analysis.

The numbers don't lie, but the calculations do.

The standard ROI calculation (and why it's fiction)

Here's the typical automation business case: manual QA takes 200 hours per regression cycle at $50/hour ($10K per cycle). Automation reduces this to 20 hours.

That's 180 hours, or $9K, saved per cycle. Run 12 cycles per year and you save $108K annually.

Net out some tooling costs, and this execution-time arithmetic produces headline figures like the insurance company's $340K projection.
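Here is the execution-time-only calculation written out as a short Python sketch. The constants mirror the example figures above. The function name is illustrative, and tooling costs are left out because no figure is given for them.

```python
# Naive automation ROI: execution hours saved times hourly rate.
# Constants mirror the example business case; this is the calculation being critiqued.

MANUAL_HOURS_PER_CYCLE = 200
AUTOMATED_HOURS_PER_CYCLE = 20
HOURLY_RATE = 50  # USD per QA hour
CYCLES_PER_YEAR = 12


def naive_annual_savings(manual_hours: float, automated_hours: float,
                         rate: float, cycles: int) -> float:
    """Savings counted only from reduced execution time per regression cycle."""
    hours_saved_per_cycle = manual_hours - automated_hours
    return hours_saved_per_cycle * rate * cycles


savings = naive_annual_savings(MANUAL_HOURS_PER_CYCLE, AUTOMATED_HOURS_PER_CYCLE,
                               HOURLY_RATE, CYCLES_PER_YEAR)
print(f"Naive annual savings: ${savings:,.0f}")  # Naive annual savings: $108,000
```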

This calculation omits everything that matters.

Test script writing time.

Maintenance when code changes.

Test update bottlenecks blocking releases.

Developer time debugging flaky tests.

The coordination overhead of QA updating tests while developers wait for fixes.
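Here is a minimal sketch of a fuller annual cost model that gives each omitted item above its own term. Every default value is a hypothetical placeholder, not data from the insurance company or any study. Using a single blended hourly rate is also a simplification, since developer time usually costs more than QA time.

```python
from dataclasses import dataclass


@dataclass
class AutomationCosts:
    """Annual cost inputs the naive model omits. Defaults are hypothetical placeholders."""
    script_authoring_hours: float = 400      # writing new test scripts
    maintenance_hours_per_change: float = 2  # fixing selectors and assertions after a change
    code_changes_per_year: int = 600         # changes that touch tested markup or behavior
    flaky_debug_hours: float = 300           # developer time chasing flaky failures
    coordination_hours: float = 250          # QA/dev hand-offs while releases wait on fixes
    tooling_cost: float = 20_000             # licenses and infrastructure, USD
    hourly_rate: float = 50                  # single blended USD rate (a simplification)

    def total(self) -> float:
        """Sum all hidden hours, convert to USD, and add tooling."""
        hours = (self.script_authoring_hours
                 + self.maintenance_hours_per_change * self.code_changes_per_year
                 + self.flaky_debug_hours
                 + self.coordination_hours)
        return hours * self.hourly_rate + self.tooling_cost


def net_annual_benefit(execution_savings: float, costs: AutomationCosts) -> float:
    """Execution-time savings minus the costs the naive model leaves out."""
    return execution_savings - costs.total()


costs = AutomationCosts()
print(f"Hidden costs: ${costs.total():,.0f}")
print(f"Net benefit:  ${net_annual_benefit(108_000, costs):,.0f}")
```

With these placeholder inputs, the hidden costs ($127,500) exceed $108K in execution savings. Substitute your own measured hours. The point of the model is that every omitted cost gets an explicit line.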

The insurance company's numbers reveal the gap between projection and reality.

They calculated $340K in savings based on execution time.

They experienced $480K in actual costs plus 23% slower releases.

The difference isn't a rounding error. It comes from measuring the wrong variables entirely.

The maintenance cost explosion

Implementation-based tests often fail because CSS selectors change during updates. The DOM structure evolves, API contracts shift, and UI components get renamed.

Every code change triggers a review of the test suite: updating broken selectors, fixing assertions, and rechecking test logic.
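Here is a toy Python model that makes the selector problem concrete. It is a hypothetical page structure, not a real browser API. A test locates the submit button by its CSS class. A redesign then renames the class, which leaves behavior unchanged but breaks the lookup.

```python
from __future__ import annotations

# Toy page model: each element is a dict of attributes.
# Hypothetical structure for illustration; real tests query a live DOM.


def find_by_css_class(page: list[dict], css_class: str) -> dict | None:
    """Implementation-based lookup: depends on a styling detail."""
    for element in page:
        if css_class in element.get("classes", []):
            return element
    return None


page_v1 = [{"tag": "button", "classes": ["btn-primary"], "text": "Submit"}]
# After a redesign the class is renamed; the button still submits the form.
page_v2 = [{"tag": "button", "classes": ["btn-success"], "text": "Submit"}]

found_before = find_by_css_class(page_v1, "btn-primary")
found_after = find_by_css_class(page_v2, "btn-primary")
print("before refactor:", found_before is not None)  # True
print("after refactor:", found_after is not None)    # False: the test now fails
```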

The maintenance burden compounds as test suites grow. More tests create more maintenance surface area.

Faster development velocity generates more frequent breakage.

Projects that looked profitable in year one become cost centers by year two as maintenance scales with codebase size.

This is why 73% of test automation projects fail. The business case assumed tests would stay valid, but implementation-based testing breaks every time code evolves.

The failure rate is not just about bad tools or QA teams. It is about basic economics that traditional ROI calculations overlook.
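Here is a toy model of why maintenance compounds. If each code change breaks a small fraction of tests, expected repair work grows with the product of suite size and change frequency. The growth trajectory, break rate, and hours per fix below are hypothetical.

```python
def yearly_maintenance_hours(tests: int, changes_per_year: int,
                             break_rate: float, hours_per_fix: float) -> float:
    """Expected repair hours per year.

    break_rate is the (hypothetical) fraction of tests broken by an average change.
    """
    expected_breaks = tests * changes_per_year * break_rate
    return expected_breaks * hours_per_fix


# Hypothetical trajectory: the suite and the change rate both grow each year.
suite_sizes = [500, 1_200, 2_000]
changes_per_year = [400, 500, 600]

hours_by_year = [
    yearly_maintenance_hours(tests, changes, break_rate=0.002, hours_per_fix=0.5)
    for tests, changes in zip(suite_sizes, changes_per_year)
]
for year, hours in enumerate(hours_by_year, start=1):
    print(f"Year {year}: ~{hours:,.0f} maintenance hours")
```

Both factors grow, so in this example the suite needs six times its year-one maintenance by year three, even though it only quadrupled in size.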

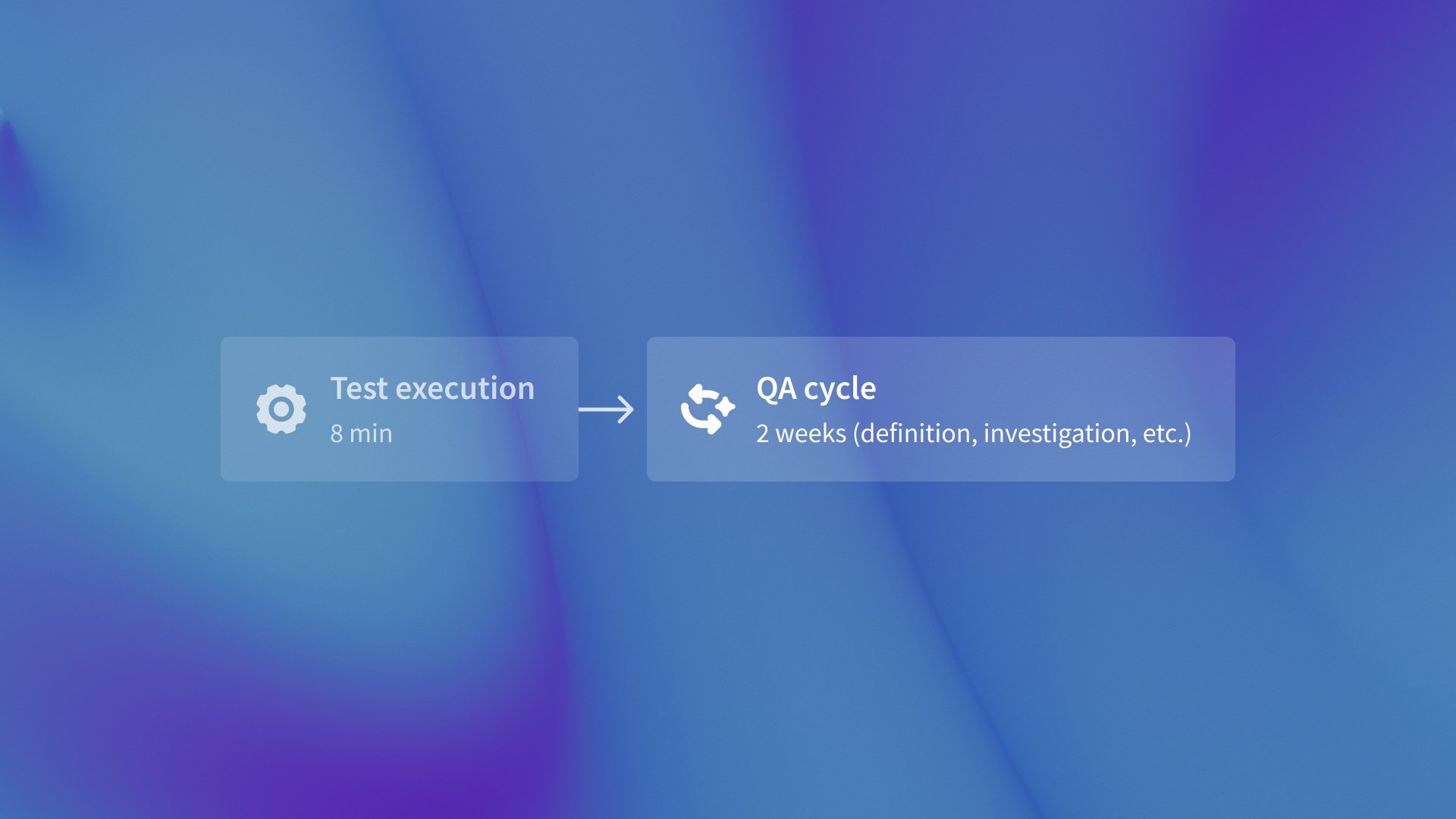

The velocity tax (how automation slows releases)

Broken tests block deployments. Engineers must fix tests before merging code changes.

This creates a bottleneck that compounds as test suites grow and code changes accelerate.

The insurance company experienced 23% slower releases despite automation.

The system that was supposed to accelerate velocity created a new critical path dependency instead.

QA updates tests while developers wait for fixes, and releases queue up behind test maintenance cycles.

In fast-moving markets, a 23% slower release cadence lets competitors ship features first, lengthens user feedback loops, and slows iteration.

The velocity tax transforms expected acceleration into actual deceleration.
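When broken tests sit on the critical path, the expected wait for their repair adds directly to release lead time. Here is a minimal expected-value sketch of that effect. The inputs are hypothetical, not the insurance company's data.

```python
def release_lead_time(build_days: float, test_run_days: float,
                      broken_test_prob: float, fix_wait_days: float) -> float:
    """Expected days from merge-ready to release.

    broken_test_prob: hypothetical chance a release candidate hits a broken test
    fix_wait_days: days developers wait while QA repairs that test
    """
    return build_days + test_run_days + broken_test_prob * fix_wait_days


baseline = release_lead_time(3, 1, broken_test_prob=0.0, fix_wait_days=0)
blocked = release_lead_time(3, 1, broken_test_prob=0.3, fix_wait_days=2)
slowdown = (blocked - baseline) / baseline
print(f"{baseline:.1f} -> {blocked:.1f} days ({slowdown:.0%} slower)")
```

A longer fix wait or a higher chance of hitting a broken test raises the slowdown. Every release pays the tax, so it accumulates across the year.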

The intent-based alternative

QA flow tests behavior from design specs rather than implementation details.

When a button changes from blue to green, intent-based tests still work. The spec states "submit button exists," not "blue button with class .btn-primary exists."

This architectural difference eliminates maintenance costs and preserves velocity gains as codebases evolve.

Testing intent instead of implementation means refactors don't trigger test updates.
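The following toy page model is hypothetical and not a real browser API. In it, an intent-based lookup matches on what the element is for, meaning its role and accessible name. The same blue-to-green redesign therefore leaves the check passing.

```python
from __future__ import annotations


def find_by_role(page: list[dict], role: str, name: str) -> dict | None:
    """Intent-based lookup: matches purpose (role + accessible name), not styling."""
    for element in page:
        if element.get("role") == role and element.get("name") == name:
            return element
    return None


# Hypothetical snapshots before and after a visual redesign.
before = [{"role": "button", "name": "Submit", "classes": ["btn-primary"], "color": "blue"}]
after = [{"role": "button", "name": "Submit", "classes": ["btn-success"], "color": "green"}]

# Spec: "submit button exists". Both snapshots satisfy it.
for label, page in (("before", before), ("after", after)):
    print(label, find_by_role(page, "button", "Submit") is not None)  # True both times
```

Browser automation libraries offer comparable queries, such as Playwright's `get_by_role` locator.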

The QA flow audit tool can analyze your current test suite and estimate how much of its maintenance work stems from implementation-based checks rather than behavior validation.
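The QA flow tool's method isn't described here, so what follows is only a hypothetical heuristic in the same spirit. It scans locator strings and counts how many encode implementation details (CSS classes or ids, XPath, positional selectors) versus user-facing intent. Regex matching like this misses cases and can produce false positives, so treat it as a rough first pass.

```python
import re

# Hypothetical heuristic, NOT the QA flow audit tool: flag locators that
# encode implementation details rather than user-facing intent.
IMPLEMENTATION_PATTERNS = [
    re.compile(r"^\s*(//|xpath=)"),         # XPath expressions
    re.compile(r"[.#][A-Za-z_-][\w-]*"),    # CSS class or id selectors
    re.compile(r":nth-(child|of-type)\("),  # positional CSS selectors
]


def classify_locator(locator: str) -> str:
    """Return 'implementation' if any pattern matches, else 'intent'."""
    if any(p.search(locator) for p in IMPLEMENTATION_PATTERNS):
        return "implementation"
    return "intent"


def audit(locators: list[str]) -> dict[str, int]:
    """Count locators in each category."""
    counts = {"implementation": 0, "intent": 0}
    for loc in locators:
        counts[classify_locator(loc)] += 1
    return counts


suite = [
    "button.btn-primary",
    "#checkout-form > div:nth-child(3) input",
    "//div[@class='header']/a",
    "role=button[name='Submit']",
    "text=Sign in",
]
print(audit(suite))  # {'implementation': 3, 'intent': 2}
```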

Autonomous testing systems that generate tests from Figma designs and user stories eliminate the test maintenance bottleneck entirely.

No human-written test cases means no test update cycles blocking releases.

The ROI equation shifts from marginal improvement to fundamental leverage.

The real calculation

Treating test automation as a cost-reduction play (replacing manual QA hours) guarantees the insurance company's outcome.

Manual testing scales linearly with features, but so does automated test maintenance when tests are implementation-based.

The better framework measures total business impact: release velocity, bug escape rates, developer productivity, and QA redeployment to high-value exploratory work.
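One way to sketch that broader framework is a net-impact function in which maintenance, release delay, and escaped defects sit alongside execution savings and the value of redeployed QA time. All field names and example values are hypothetical. For brevity, developer productivity is folded into the maintenance and delay terms.

```python
from dataclasses import dataclass


@dataclass
class ImpactInputs:
    """Annual figures for one automation approach; all values are user-supplied estimates."""
    execution_savings: float     # manual execution hours avoided, in USD
    redeployed_qa_value: float   # value of QA time moved to exploratory work, USD
    maintenance_cost: float      # test upkeep, including flaky-test debugging, USD
    release_delay_days: float    # net delay days caused by the suite (negative if it speeds releases)
    cost_per_delay_day: float    # estimated business cost of one day of delay, USD
    escaped_bugs: int            # production defects the suite failed to catch
    cost_per_escaped_bug: float  # average cost to triage and fix one escaped bug, USD


def total_impact(x: ImpactInputs) -> float:
    """Net annual impact: value created minus maintenance, delay, and escaped-defect costs."""
    value = x.execution_savings + x.redeployed_qa_value
    costs = (x.maintenance_cost
             + x.release_delay_days * x.cost_per_delay_day
             + x.escaped_bugs * x.cost_per_escaped_bug)
    return value - costs


# Hypothetical example values; replace with your own measurements.
example = ImpactInputs(
    execution_savings=108_000,
    redeployed_qa_value=25_000,
    maintenance_cost=150_000,
    release_delay_days=20,
    cost_per_delay_day=2_000,
    escaped_bugs=10,
    cost_per_escaped_bug=3_000,
)
print(f"Net annual impact: ${total_impact(example):,.0f}")
```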

Compare automation to manual testing and you'll optimize for execution time.

Measure the full pipeline impact, including maintenance costs and release velocity, and you'll see why intent-based methods address the problems that turn projected savings into real losses.

That's not an automation problem. That's an architecture problem.
